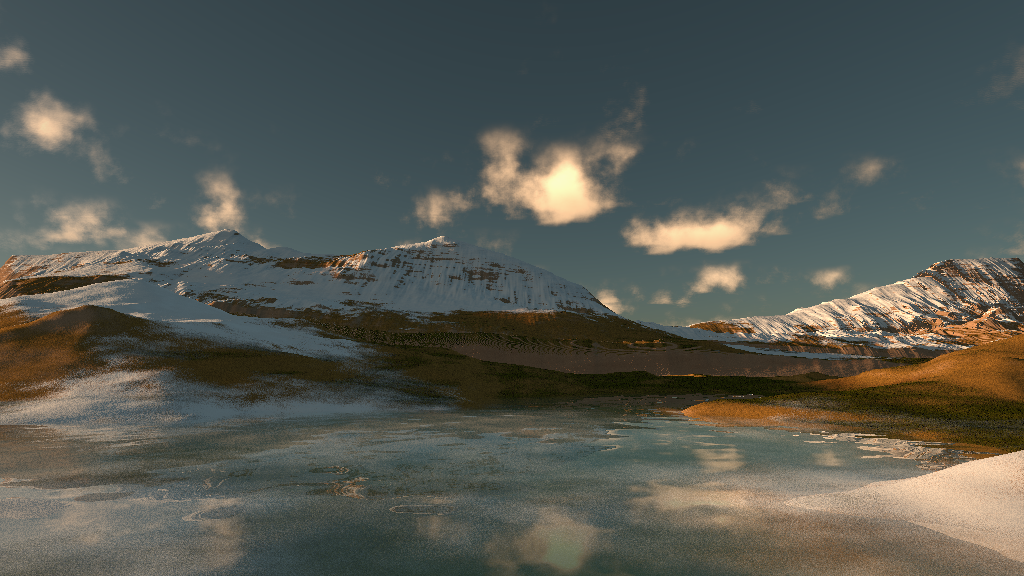

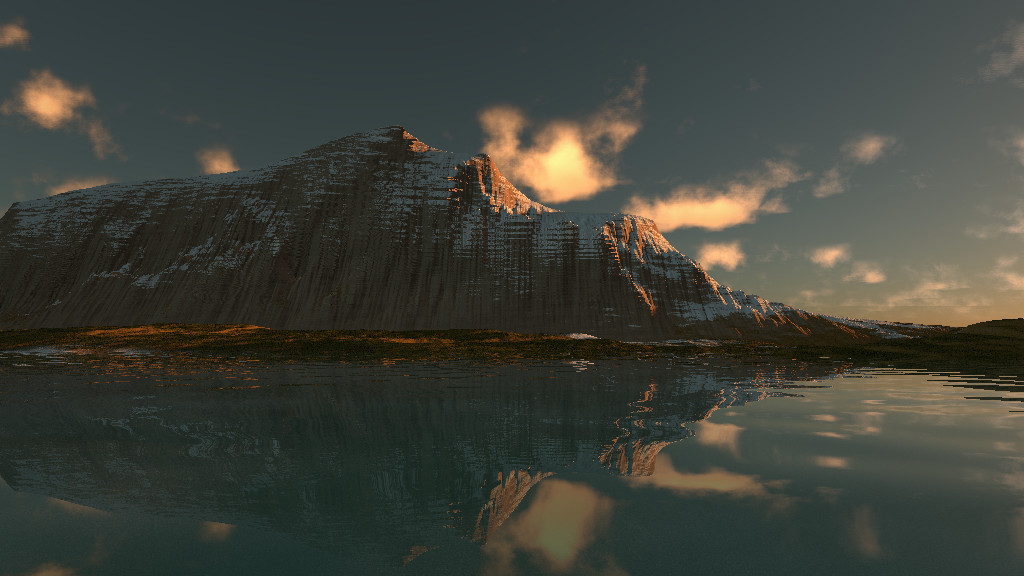

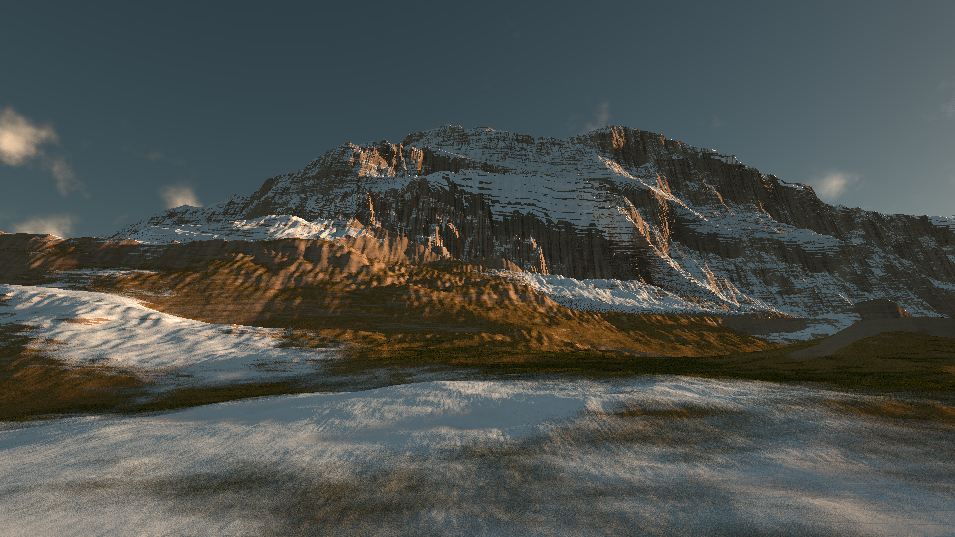

I spent some time playing with an iPad app experiment called Rob Boss. The idea was simple: draw some hills on your screen and watch them transform into a generated 3D landscape.

Even though it never got past version 0.003, I thought it would be fun to share some results and thoughts about it.

How It Works

The app lets you sketch the silhouettes of hills and mountains at different distances from the camera. As you draw these 2D outlines, the app generates a 3D landscape that matches your sketches. You can drag the sun around to change the lighting and adjust the water level by sliding your finger up or down.
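To make the sun and water controls a bit more concrete, here is a minimal sketch of how a draggable sun and a water level could feed into shading. It is not the app's actual code: it assumes simple Lambert (diffuse) lighting, and `sun_direction`, `surface_normal`, `shade`, and their parameters are hypothetical names chosen for illustration.

```python
import math

def sun_direction(azimuth_deg, elevation_deg):
    """Unit vector pointing toward the sun (assumed mapping from a drag gesture)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.sin(el), math.cos(el) * math.sin(az))

def surface_normal(height_fn, x, z, eps=1e-3):
    """Approximate the terrain normal with central differences."""
    dhdx = (height_fn(x + eps, z) - height_fn(x - eps, z)) / (2 * eps)
    dhdz = (height_fn(x, z + eps) - height_fn(x, z - eps)) / (2 * eps)
    nx, ny, nz = -dhdx, 1.0, -dhdz
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)

def shade(height_fn, x, z, sun_dir, water_level=0.0):
    """Diffuse brightness in [0, 1]; anything at or below water_level is flat water."""
    if height_fn(x, z) <= water_level:
        normal = (0.0, 1.0, 0.0)  # water surface faces straight up
    else:
        normal = surface_normal(height_fn, x, z)
    return max(0.0, sum(n * s for n, s in zip(normal, sun_dir)))
```

Dragging the sun would then just change the two angles, and sliding the water level would change `water_level`, with the preview re-shaded each frame.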

The real-time preview uses OpenGL to give immediate feedback while drawing. For the final renders, I implemented a ray-marching render loop on the CPU – which, predictably, runs incredibly slowly. But hey, it works!
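For readers who have not seen it before, ray marching over a height field can be sketched roughly like this. This is an illustrative version, not the app's render loop: it assumes a fixed step size with a bisection refinement, and `march_ray`, `terrain_height`, and the parameter names are made up for the example.

```python
import math

def terrain_height(x, z):
    """Hypothetical stand-in terrain: gentle rolling hills."""
    return 0.5 * math.sin(x) * math.cos(z)

def march_ray(origin, direction, height_fn, max_dist=50.0, step=0.01):
    """Step along the ray until it dips below the terrain.

    Returns the distance to the first hit, or None if nothing is hit
    within max_dist. `direction` is expected to be a unit vector.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction

    def below(t):
        # True when the ray point at distance t is at or under the surface.
        return oy + dy * t <= height_fn(ox + dx * t, oz + dz * t)

    prev_t = 0.0
    t = step
    while t < max_dist:
        if below(t):
            # Refine the crossing between the last two samples by bisection.
            lo, hi = prev_t, t
            for _ in range(20):
                mid = 0.5 * (lo + hi)
                if below(mid):
                    hi = mid
                else:
                    lo = mid
            return hi
        prev_t = t
        t += step
    return None
```

Running one such march per pixel, with thousands of height evaluations per ray, goes a long way toward explaining why a plain CPU loop like this is slow.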

Technical Details

The landscape generation is loosely inspired by Terragen-style height-field rendering. The app treats your 2D silhouettes as constraints on the procedurally generated terrain. It’s interesting to see how different combinations of silhouettes lead to different terrain formations.
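One simple way to picture silhouettes acting as constraints is to pin the terrain to each outline at that outline's depth and blend in between. The sketch below does exactly that with linear interpolation. It is an assumption about how such a scheme could work rather than the method Rob Boss uses, and `build_heightfield` and its parameters are hypothetical.

```python
def build_heightfield(silhouettes, depths, rows):
    """Blend silhouette profiles into a 2D height grid.

    silhouettes: list of equal-length height lists, ordered near to far.
    depths: strictly increasing depth of each silhouette.
    rows: number of depth rows in the output grid (at least 2).
    Returns grid[row][column], where row 0 sits at the nearest depth.
    """
    if len(silhouettes) != len(depths) or len(silhouettes) < 2 or rows < 2:
        raise ValueError("need matching silhouettes/depths (>= 2) and rows >= 2")

    near, far = depths[0], depths[-1]
    grid = []
    for r in range(rows):
        z = near + (far - near) * r / (rows - 1)
        # Find the pair of silhouettes that brackets this depth.
        i = 0
        while i < len(depths) - 2 and z > depths[i + 1]:
            i += 1
        t = (z - depths[i]) / (depths[i + 1] - depths[i])
        # Linear blend between the nearer and farther outline.
        row = [(1 - t) * a + t * b
               for a, b in zip(silhouettes[i], silhouettes[i + 1])]
        grid.append(row)
    return grid
```

At each sketched depth the grid reproduces the drawn outline exactly; a real generator would presumably layer procedural noise on top so the slopes between outlines do not look like straight ramps.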

Future Potential

While I only spent a short time on this experiment, I think the concept has potential. Especially in VR, shaping landscapes with your hands could be pretty compelling. Imagine standing in your generated world while sculpting the mountains around you.